I'm so excited that stanford is offering free online version of machine learning course this year, where you get to participate online like a student, finish the assignments(in time) and give the exams.

Linear algebra is one must have prerequisite for ML. I've done a course on same while I was in college. But, at that time, I learned more of calculations in linear algebra(multiplying matrices, calculating row reduced echelon form, calculating eigenvalues etc) and less of concepts. Now, the time has come when I really need to "understand" what those computations mean and what is the significance.

So, I watched some video lectures from MIT OCW Linear algebra course. I definitely recommend these lectures to everyone looking for a solid introduction to the topic.

As always, for better understanding and quick review, I took some notes. They can be found here.

Thursday, September 15, 2011

Monday, July 18, 2011

the XOR trick

XOR is one of the standard bitwise operation. Here is the truth table for same..

1 XOR 0 = 1

0 XOR 1 = 1

0 XOR 0 = 0

1 XOR 1 = 0

The trick that XOR provides is that if you take XOR of two N bit numbers(let say c = a XOR b), which is another N bit number then given one number(a or b) and the XOR result(c) you can determine the other number.

Basically( it can be easily proved by using truth tables)

a XOR (a XOR b) = b

b XOR (a XOR b) = a

This trick can be used to, for example, swap two integers without using a temporary variable.

Let say you wanted to swap x and y. Here is the required sequence.

x = x XOR y

y = x XOR y (y becomes old x)

x = x XOR y (x becomes old y)

In another use of this trick, you can implement doubly-linked list from a singly linked list without storing two pointers(prev, next) but just one(prev XOR next).

1 XOR 0 = 1

0 XOR 1 = 1

0 XOR 0 = 0

1 XOR 1 = 0

The trick that XOR provides is that if you take XOR of two N bit numbers(let say c = a XOR b), which is another N bit number then given one number(a or b) and the XOR result(c) you can determine the other number.

Basically( it can be easily proved by using truth tables)

a XOR (a XOR b) = b

b XOR (a XOR b) = a

This trick can be used to, for example, swap two integers without using a temporary variable.

Let say you wanted to swap x and y. Here is the required sequence.

x = x XOR y

y = x XOR y (y becomes old x)

x = x XOR y (x becomes old y)

In another use of this trick, you can implement doubly-linked list from a singly linked list without storing two pointers(prev, next) but just one(prev XOR next).

Friday, July 8, 2011

How to be a good (algorithmic) problem solver

Over the years I have worked with many algorithmic problems and there is definitely a pattern in the approach for solving them. I would like to note down my current approach of solving them and many books talk about similar stuff.

0. Solve by bruteforce(or backtracking)

If the problem size is small and bruteforce approach is ok, then do it and not worry too much about the efficient solution. Make sure you do some back of the envelop runtime cost calculations before you disregard bruteforce. In some problems, you might need an intelligent algorithm to solve a sub-problem and then a bruteforce approach to combine sub-problem solutions. This is especially true for some of the problems in code competitions.

now on to the ways of finding efficient solutions....

1. Solve by Example or solving problem instances of small sizes, n = 1,2,3..

Here we solve some example inputs to the problem, e.g. if the problem input is a number n, then I will try to solve it manually for n = 1,2,3,4... and see if a solution clicks in my mind. This same approach can sometimes lead to solution involving recursion(and dynamic programming) described later.

2. Solve by Simplification

Here We try to remove/add some constraints from/to the problem that simplify it, solve the simpler version or a different but similar problem and see if it can be used to solve/understand the original problem.

3. Solve by Recursion

"Thinking Recursively" is an art. Best way to "get" recursion is to practice and read the literature on functional programming where recursion is one of the key concepts. I highly recommend working through SICP and the "wishful thinking" concept described there. For example, to get factorial(n) you will think like, make a wish that someone gives you the factorial(n-1) then how will you get factorial(n) using that of (n-1). Be wishful !!

One of the things I "got" over time is that, for recursion to work It is not necessary that

f(n) = g(f(n-1))

but all of the following could work also

f(n) = g(f(n-1),f(n-2))

f(n) = g(f(n-1),f(n-2),f(n-3))

....

f(n) = g(f(n-1),f(n-2),...f(1),f(0))

3.1 Divide and Conquer(for example standard solution to merge-sort) is another powerful problem solving technique which uses recursion. Here, you solve the original problem by "merging" the solutions of two or more disjoint sub-problems(which are solved recursively).

3.2 Dynamic Programming is an optimization to improve running time after you have a recursive strategy. Draw the recursion tree and see if same sub-problem is being solved multiple times. That is, you will notice that total number of distinct sub-problems to be solved is much less than the total number of sub-problems being solved in straightforward recursive implementation. (In cormen et al, it is called the overlapping sub-problems property).

In such cases, a lot of, redundant computation is saved by caching the solution of various sub-problems and reusing them when required again.

Solution can be implemented either top down recursive with a table to cache the sub-problem solutions or a bottom-up iterative where you solve the smaller sub-problems first and then solve the bigger using solution of smaller sub-problems.

Some problems solved by dynamic programming are optimization problems and they exhibit optimal substructure property, that basically means that optimal solution to bigger problem "contains" optimal solution to the smaller sub-problems.

Most of the time, proof by induction is used here to prove the correctness of algorithm.

4. Solve using Greedy approach

It is a algorithm technique where making locally optimal/correct decisions, *sometimes*, leads to globally optimal/correct solutions. Usually, you can get idea of the solution by solving small size instances of the problem. However, this idea may or may not be correct so it is *essential* to prove that your locally optimal strategy gives you globally optimal results too.

In most of the cases following proof strategies work for greedy algorithms..

- proof by induction

- proof by contradiction: it is sometimes combined with, proof by exchange argument. Here you assume some optimal solution S* and a solution S produced by your greedy algorithm. Using, exchange of elements in S* so that exchange either preserves the optimality score or makes it better, you transform S* to S and that essentially proves that S is either better or equally good as S*. And hence, your greedy strategy works.

- Anything else :)

5. Solve by Reduction

Here we try to reduce the given problem to another problem whose solution is well-known and many of the code challenges problems are of this nature. Some of the algorithms, which come up very frequently, and you should know them by heart(including their proof, and if you have problem reading proofs then you need to work through How to Prove it!). Knowing them also means having their concise/optimal implementation in your [muscle] memory.

Sorting Algorithms - Index Sort, Merge Sort, Quick Sort, Bucket Sort, Radix Sort .

Binary Search Algorithm .

Graph Algorithms:

Breadth First Search(BFS) - Its most popular usage is to find single source shortest path in unweighted graph .

Depth First Search(DFS) .

Note that, Checking Bipartiteness of an undirected graph have standard BFS and DFS based solutions which you should know. Also, either of them can be used to find connected components in an undirected graph.

Topological Sorting [lets you find shortest/longest paths in a DAG in linear time, can be used to solve problems where there are some dependencies/prerequisites and you are required to find a valid ordering]

Strongly Connected Components of directed graph

Finding Euler Path

Dijkstra's Algorithm - for single source shortest path in weighted graph .

Bellman-Ford Algorithm - for single source shortest path in weighted graph with negative edges.

Floyd-Warshall algorithm - for all pair shortest path .

Kruskal and Prim's algorithms - for finding minimum spanning tree .

Max Network Flow (Ford-Fulkerson Algorithm)

Knapsack Problem

Zero-Sum and Alpha-Beta Search

5. Solve by using Data-Structures

You should know how to use Array, Hash table, (Single and Doubly) Linked List traversal, Pre/Post/In order traversal of Binary [Search] tree, Stack and Queue.

Many a times a problem can be solved by inserting the input into one of these data structures and traversing(or retrieving data from) it in certain way.

I found Stack interesting in another way. Most of the problems that are solved by simple recursion are also solvable using Stack and it is no surprise as in case of recursion the stack is hidden in the programming language implementation.

Let me conclude by saying that above list of algorithms and data structures is by no means exhaustive. They are just the most important and basic ones which you should know in great detail including their implementation and proofs.

In my opinion, Part-II(Problem Solving) of AIMA consists of very important algorithms and problem solving techniques. They are mostly related to Graph search but, you will see that there are a lot of problems that can be reduced to Graph search. One should work through at least that part of the book at some point in his life.

Another thing, you should be comfortable with, is basic calculations of computing (time/memory) complexity, the Big-O notation and methods of solving recurrence relations(which come up when computing complexity of any recursive algorithm).

Next level would be to understand theory of NP completeness(and awareness of known hard problems e.g. traveling-salesman-problem and their approximate solutions). You might want to know about advanced data structures such as BTree, KDTree, Trie etc and other graph algorithms. But these, I believe, you don't need to understand in great detail but just the usage. It is impossible to remember all the algorithms. However, you need to be "aware" of as many known data structures and algorithms as possible.

Lastly, This is a journey.. farther you go, better you get.

[Update: July 25' 2011] Sometimes writing precise statement of the problem also helps.

Basically it means to describe your problem under following headings...

Problem Description

Input

Output

Constraints

[Update: July 28' 2011] Sometimes problem is easier(or more efficient) to solve when the input is *Sorted*( or *preprocessed* in general) .

Also there can be problems where finding a *Signature* helps. For example problems related to anagrams of a string are usually solved by finding the signature that is the count of individual characters in the word or just the lexicographical sort of the word.

[Update: Feb 3' 2013] When solving an algorithmic problems. You should convince yourself, with [in]formal proofs, of 3 things (in worst cases)

1. Your algorithm will always terminate.

2. It will always produce the correct solution.

3. What is the worst case running time? (should be polynomial)

However, when solving some NP-complete problem, then 2 can be relaxed in the sense that algorithm is good enough as long as it either computes approximately good solution(if you're ok with it) or correct solution to specific instances of the general problem, that you are interested in. This relaxation is must because, with very high probability, there is no efficient(..polynomial time) solution to general problem you're solving.

0. Solve by bruteforce(or backtracking)

If the problem size is small and bruteforce approach is ok, then do it and not worry too much about the efficient solution. Make sure you do some back of the envelop runtime cost calculations before you disregard bruteforce. In some problems, you might need an intelligent algorithm to solve a sub-problem and then a bruteforce approach to combine sub-problem solutions. This is especially true for some of the problems in code competitions.

now on to the ways of finding efficient solutions....

1. Solve by Example or solving problem instances of small sizes, n = 1,2,3..

Here we solve some example inputs to the problem, e.g. if the problem input is a number n, then I will try to solve it manually for n = 1,2,3,4... and see if a solution clicks in my mind. This same approach can sometimes lead to solution involving recursion(and dynamic programming) described later.

2. Solve by Simplification

Here We try to remove/add some constraints from/to the problem that simplify it, solve the simpler version or a different but similar problem and see if it can be used to solve/understand the original problem.

3. Solve by Recursion

"Thinking Recursively" is an art. Best way to "get" recursion is to practice and read the literature on functional programming where recursion is one of the key concepts. I highly recommend working through SICP and the "wishful thinking" concept described there. For example, to get factorial(n) you will think like, make a wish that someone gives you the factorial(n-1) then how will you get factorial(n) using that of (n-1). Be wishful !!

One of the things I "got" over time is that, for recursion to work It is not necessary that

f(n) = g(f(n-1))

but all of the following could work also

f(n) = g(f(n-1),f(n-2))

f(n) = g(f(n-1),f(n-2),f(n-3))

....

f(n) = g(f(n-1),f(n-2),...f(1),f(0))

3.1 Divide and Conquer(for example standard solution to merge-sort) is another powerful problem solving technique which uses recursion. Here, you solve the original problem by "merging" the solutions of two or more disjoint sub-problems(which are solved recursively).

3.2 Dynamic Programming is an optimization to improve running time after you have a recursive strategy. Draw the recursion tree and see if same sub-problem is being solved multiple times. That is, you will notice that total number of distinct sub-problems to be solved is much less than the total number of sub-problems being solved in straightforward recursive implementation. (In cormen et al, it is called the overlapping sub-problems property).

In such cases, a lot of, redundant computation is saved by caching the solution of various sub-problems and reusing them when required again.

Solution can be implemented either top down recursive with a table to cache the sub-problem solutions or a bottom-up iterative where you solve the smaller sub-problems first and then solve the bigger using solution of smaller sub-problems.

Some problems solved by dynamic programming are optimization problems and they exhibit optimal substructure property, that basically means that optimal solution to bigger problem "contains" optimal solution to the smaller sub-problems.

Most of the time, proof by induction is used here to prove the correctness of algorithm.

4. Solve using Greedy approach

It is a algorithm technique where making locally optimal/correct decisions, *sometimes*, leads to globally optimal/correct solutions. Usually, you can get idea of the solution by solving small size instances of the problem. However, this idea may or may not be correct so it is *essential* to prove that your locally optimal strategy gives you globally optimal results too.

In most of the cases following proof strategies work for greedy algorithms..

- proof by induction

- proof by contradiction: it is sometimes combined with, proof by exchange argument. Here you assume some optimal solution S* and a solution S produced by your greedy algorithm. Using, exchange of elements in S* so that exchange either preserves the optimality score or makes it better, you transform S* to S and that essentially proves that S is either better or equally good as S*. And hence, your greedy strategy works.

- Anything else :)

5. Solve by Reduction

Here we try to reduce the given problem to another problem whose solution is well-known and many of the code challenges problems are of this nature. Some of the algorithms, which come up very frequently, and you should know them by heart(including their proof, and if you have problem reading proofs then you need to work through How to Prove it!). Knowing them also means having their concise/optimal implementation in your [muscle] memory.

Sorting Algorithms - Index Sort, Merge Sort, Quick Sort, Bucket Sort, Radix Sort .

Binary Search Algorithm .

Graph Algorithms:

Breadth First Search(BFS) - Its most popular usage is to find single source shortest path in unweighted graph .

Depth First Search(DFS) .

Note that, Checking Bipartiteness of an undirected graph have standard BFS and DFS based solutions which you should know. Also, either of them can be used to find connected components in an undirected graph.

Topological Sorting [lets you find shortest/longest paths in a DAG in linear time, can be used to solve problems where there are some dependencies/prerequisites and you are required to find a valid ordering]

Strongly Connected Components of directed graph

Finding Euler Path

Dijkstra's Algorithm - for single source shortest path in weighted graph .

Bellman-Ford Algorithm - for single source shortest path in weighted graph with negative edges.

Floyd-Warshall algorithm - for all pair shortest path .

Kruskal and Prim's algorithms - for finding minimum spanning tree .

Max Network Flow (Ford-Fulkerson Algorithm)

Knapsack Problem

Zero-Sum and Alpha-Beta Search

5. Solve by using Data-Structures

You should know how to use Array, Hash table, (Single and Doubly) Linked List traversal, Pre/Post/In order traversal of Binary [Search] tree, Stack and Queue.

Many a times a problem can be solved by inserting the input into one of these data structures and traversing(or retrieving data from) it in certain way.

I found Stack interesting in another way. Most of the problems that are solved by simple recursion are also solvable using Stack and it is no surprise as in case of recursion the stack is hidden in the programming language implementation.

Let me conclude by saying that above list of algorithms and data structures is by no means exhaustive. They are just the most important and basic ones which you should know in great detail including their implementation and proofs.

In my opinion, Part-II(Problem Solving) of AIMA consists of very important algorithms and problem solving techniques. They are mostly related to Graph search but, you will see that there are a lot of problems that can be reduced to Graph search. One should work through at least that part of the book at some point in his life.

Another thing, you should be comfortable with, is basic calculations of computing (time/memory) complexity, the Big-O notation and methods of solving recurrence relations(which come up when computing complexity of any recursive algorithm).

Next level would be to understand theory of NP completeness(and awareness of known hard problems e.g. traveling-salesman-problem and their approximate solutions). You might want to know about advanced data structures such as BTree, KDTree, Trie etc and other graph algorithms. But these, I believe, you don't need to understand in great detail but just the usage. It is impossible to remember all the algorithms. However, you need to be "aware" of as many known data structures and algorithms as possible.

Lastly, This is a journey.. farther you go, better you get.

[Update: July 25' 2011] Sometimes writing precise statement of the problem also helps.

Basically it means to describe your problem under following headings...

Problem Description

Input

Output

Constraints

[Update: July 28' 2011] Sometimes problem is easier(or more efficient) to solve when the input is *Sorted*( or *preprocessed* in general) .

Also there can be problems where finding a *Signature* helps. For example problems related to anagrams of a string are usually solved by finding the signature that is the count of individual characters in the word or just the lexicographical sort of the word.

[Update: Feb 3' 2013] When solving an algorithmic problems. You should convince yourself, with [in]formal proofs, of 3 things (in worst cases)

1. Your algorithm will always terminate.

2. It will always produce the correct solution.

3. What is the worst case running time? (should be polynomial)

However, when solving some NP-complete problem, then 2 can be relaxed in the sense that algorithm is good enough as long as it either computes approximately good solution(if you're ok with it) or correct solution to specific instances of the general problem, that you are interested in. This relaxation is must because, with very high probability, there is no efficient(..polynomial time) solution to general problem you're solving.

Sunday, June 26, 2011

Yahoo Summer School on IR - 2011

Last week, Yahoo Research organized a summer school on IR at IISC, Bangalore campus. This is the first time I visited IISC, the campus is lush green all around and add to it the nice weather.

Most of the sessions on classical IR were fairly detailed(but fast paced). A lot of the discussion assumed upfront knowledge of basic probability theory and linear algebra, which is kind of ok if you are attending a session on any advanced computer science material.

I could easily comprehend the sessions on Introduction to IR, IR Models(Boolean Retrieval, Vector space model, BIRM & BM25, IR based on language model), IR system quality evaluation and also how to create test collections, index creation with a bit of index compression techniques. Along the way, I followed the first half(up to chapter - 12, Language models for IR) of IIR book which covers more or less the same material covered in the mentioned sessions.

Then the sessions on web retrieval did a good job of describing the challenges faced in the web retrieval. It covered a very high level shape of web graph, architecture of web IR and crawlers. This also gave details about how pagerank is typically calculated from the web graph.

Then there were sessions on multimedia retrieval and learning to rank which tried to cover too much ground and I could only get a very high level outline of the area.

Overall it was a good effort and provided a very good introduction to the field of IR. I wish they had covered more on dynamic indexing as in the real world documents get added, deleted and modified every moment.

And, Yahoo research definitely deserves much appreciation for organizing this summer school.

References:

Most of the slides from sessions are available with Yahoo labs.

Modern Information Retrieval is written by one of the presenters in the summer school. It was cited multiple times and sessions on web retrieval are directly from the book chapters.

Introduction to Information Retrieval is another well known book by the author of famous Foundations of statistical natural language processing.

Most of the sessions on classical IR were fairly detailed(but fast paced). A lot of the discussion assumed upfront knowledge of basic probability theory and linear algebra, which is kind of ok if you are attending a session on any advanced computer science material.

I could easily comprehend the sessions on Introduction to IR, IR Models(Boolean Retrieval, Vector space model, BIRM & BM25, IR based on language model), IR system quality evaluation and also how to create test collections, index creation with a bit of index compression techniques. Along the way, I followed the first half(up to chapter - 12, Language models for IR) of IIR book which covers more or less the same material covered in the mentioned sessions.

Then the sessions on web retrieval did a good job of describing the challenges faced in the web retrieval. It covered a very high level shape of web graph, architecture of web IR and crawlers. This also gave details about how pagerank is typically calculated from the web graph.

Then there were sessions on multimedia retrieval and learning to rank which tried to cover too much ground and I could only get a very high level outline of the area.

Overall it was a good effort and provided a very good introduction to the field of IR. I wish they had covered more on dynamic indexing as in the real world documents get added, deleted and modified every moment.

And, Yahoo research definitely deserves much appreciation for organizing this summer school.

References:

Most of the slides from sessions are available with Yahoo labs.

Modern Information Retrieval is written by one of the presenters in the summer school. It was cited multiple times and sessions on web retrieval are directly from the book chapters.

Introduction to Information Retrieval is another well known book by the author of famous Foundations of statistical natural language processing.

Saturday, April 30, 2011

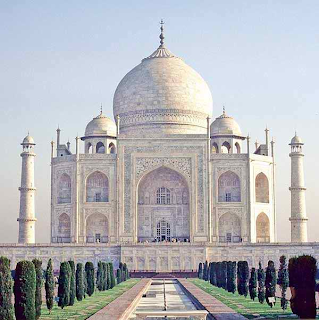

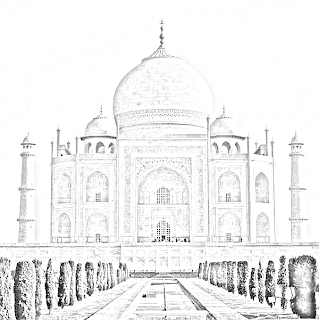

How I wrote [java]code to give Pencil Sketch effect to a picture

I am creating an application where I wanted to transform images to give them pencil sketch effect which can be easily obtained by photoshop as is described here.

However, I needed to automate the whole process in the code and certainly it is beyond me to look "inside" photoshop to see how it works(what are the algorithms/transformations etc and their implementations).

Anyway, from the article above it became clear that pseudocode looks like following:

s = Read-File-Into-Image("/path/to/image")

g = Convert-To-Gray-Scale(s)

i = Invert-Colors(g)

b = Apply-Gaussian-Blur(i)

result = Color-Dodge-Blend-Merge(b,g)

Now it was a matter of finding open source image-processing library that provided the operations I needed. By googling, I landed on JH Labs. They, freely, provide an image editor on which I tried to replicate the process described in photoshop article mentioned above and indeed I got the pencil sketch effect. Now, the best part is that image processing library behind JH Labs image editor is open source.

Then it was just a matter of coding the pseudocode using the JH labs library and here it is...

Here is how the image transform when I apply above code...

Notes:

- I used above code on png images. JH Labs code only works with TYPE_INT_ARGB(ref) images so you might need to transform them correctly.

- Code shown above was written to just check the concept and has no regards for performance, beauty.

Acknowledgement:

Well, to be honest, from being a alien to image-processing to getting to the code was not as linear a process as described in this post and I had to do a lot of hit n trial to get to this point. I want to thank Jerry who patiently answered my queries.

However, I needed to automate the whole process in the code and certainly it is beyond me to look "inside" photoshop to see how it works(what are the algorithms/transformations etc and their implementations).

Anyway, from the article above it became clear that pseudocode looks like following:

s = Read-File-Into-Image("/path/to/image")

g = Convert-To-Gray-Scale(s)

i = Invert-Colors(g)

b = Apply-Gaussian-Blur(i)

result = Color-Dodge-Blend-Merge(b,g)

Now it was a matter of finding open source image-processing library that provided the operations I needed. By googling, I landed on JH Labs. They, freely, provide an image editor on which I tried to replicate the process described in photoshop article mentioned above and indeed I got the pencil sketch effect. Now, the best part is that image processing library behind JH Labs image editor is open source.

Then it was just a matter of coding the pseudocode using the JH labs library and here it is...

BufferedImage src = null;

BufferedImage target = null;

src = ImageIO.read(new File("C:\\tmp\\ss.png"));

src = ImageUtils.convertImageToARGB(src);

//transformations begin=============

//gray scale

PointFilter grayScaleFilter = new GrayscaleFilter();

BufferedImage grayScale = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

grayScaleFilter.filter(src, grayScale);

//inverted gray scale

BufferedImage inverted = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

PointFilter invertFilter = new InvertFilter();

invertFilter.filter(grayScale,inverted);

//gaussian blurr

GaussianFilter gaussianFilter = new GaussianFilter(20);

BufferedImage gaussianFiltered = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

gaussianFilter.filter(inverted, gaussianFiltered);

//color dodge

ColorDodgeComposite cdc = new ColorDodgeComposite(1.0f);

CompositeContext cc = cdc.createContext(inverted.getColorModel(), grayScale.getColorModel(), null);

Raster invertedR = gaussianFiltered.getRaster();

Raster grayScaleR = grayScale.getRaster();

BufferedImage composite = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

WritableRaster colorDodgedR = composite.getRaster();

cc.compose(invertedR, grayScaleR , colorDodgedR);

//==================================

target = composite;

File outputfile = new File("C:\\tmp\\saved.png");

ImageIO.write(target, "png", outputfile);

Here is how the image transform when I apply above code...

Notes:

- I used above code on png images. JH Labs code only works with TYPE_INT_ARGB(ref) images so you might need to transform them correctly.

- Code shown above was written to just check the concept and has no regards for performance, beauty.

Acknowledgement:

Well, to be honest, from being a alien to image-processing to getting to the code was not as linear a process as described in this post and I had to do a lot of hit n trial to get to this point. I want to thank Jerry who patiently answered my queries.

Monday, April 18, 2011

How I revised Calculus

I have been following some stuff on machine learning and realized that over time I have forgotten some of the calculus stuff that I learned in college.

I mostly remembered single variable differentiation, integration, maxima/minima etc but could not recollect the precise meaning of limit, continuity, series convergence and also the taylor series and multivariable calculus stuff(partial derivatives, optimization of multivariable function, lagrange multipliers etc).

This note is the shortcut that I took to quickly recap all of the above.

First, I read following( relevant) chapters/sections from the Calculus book by Professor Gilbert Strang.

Section 2.6(Limits) and 2.7(Continuous Functions)

Chapter 10 (Infinite Series)

Chapter 11 (Vectors and Matrices)

Chapter 13 (Partial Derivatives)

I must say that the book is great and covers a lot of breadth maintaining enough depth. However, I could not fully grasp the contents of Chapter-13(Partial Derivatives), probably because of the 3d diagrams on 2d paper(or maybe I just could not pay enough attention).

In order to understand this chapter I followed all the video lectures on Partial Derivatives(Lecture-8 to Lecture-14) from MIT Multivariable calculus course. The video lectures are easier to follow and also explains diagrams done in applets which are much easier to understand. Also, the lecture notes give a very good summary of stuff covered in the lecture videos.

I mostly remembered single variable differentiation, integration, maxima/minima etc but could not recollect the precise meaning of limit, continuity, series convergence and also the taylor series and multivariable calculus stuff(partial derivatives, optimization of multivariable function, lagrange multipliers etc).

This note is the shortcut that I took to quickly recap all of the above.

First, I read following( relevant) chapters/sections from the Calculus book by Professor Gilbert Strang.

Section 2.6(Limits) and 2.7(Continuous Functions)

Chapter 10 (Infinite Series)

Chapter 11 (Vectors and Matrices)

Chapter 13 (Partial Derivatives)

I must say that the book is great and covers a lot of breadth maintaining enough depth. However, I could not fully grasp the contents of Chapter-13(Partial Derivatives), probably because of the 3d diagrams on 2d paper(or maybe I just could not pay enough attention).

In order to understand this chapter I followed all the video lectures on Partial Derivatives(Lecture-8 to Lecture-14) from MIT Multivariable calculus course. The video lectures are easier to follow and also explains diagrams done in applets which are much easier to understand. Also, the lecture notes give a very good summary of stuff covered in the lecture videos.

Wednesday, April 13, 2011

embedding activemq in jboss

Today, I worked on a prototype web application that utilized JMS. We decided to use ActiveMQ for this. Here are the things I had to do to get it running embedded inside Jboss.

First, I followed the process described in the doc "Integrating ActiveMQ with Jboss".

By default ActiveMQ uses KahaDB for persistence, but I wanted to use our oracle db infrastructure for this. A bit of googling got me to Introduction to ActiveMQ persistence and that led to JDBC data source configuration . By now I got the ActiveMQ successfully embedded in Jboss and running.

Next, Instead of giving all the oracle db info in the broker-config.xml, I wanted to get it from Jboss managed jdbc datasource(which is being used by other applications also). So, I have already got mydatasource-ds.xml deployed inside deploy/ folder of jboss which looks like following

In order to use above datasource, Here is what I had to put inside activemq-ra.rar/broker-config.xml file.

Well, above did not work. What happened was that Jboss was trying to start ActiveMQ before deploying mydatasource-ds.xml which resulted in JNDI NameNotFoundException(as java:/jdbc/mydatasource-ds is not available until mydatasource-ds.xml gets deployed). So, in ActiveMQ configuration, I had to declare dependency on mydatasource-ds(so that it gets deployed first). To do it, I created a file named jboss-dependency.xml inside activemq-ra.rar/META-INF/ and pasted following on it.

Now, We are good :).

BTW, above is by no means an exhaustive list because as we go, we will make a lot of changes for the actual setup but above should get a new person started with less amount of trouble than I had.

First, I followed the process described in the doc "Integrating ActiveMQ with Jboss".

By default ActiveMQ uses KahaDB for persistence, but I wanted to use our oracle db infrastructure for this. A bit of googling got me to Introduction to ActiveMQ persistence and that led to JDBC data source configuration . By now I got the ActiveMQ successfully embedded in Jboss and running.

Next, Instead of giving all the oracle db info in the broker-config.xml, I wanted to get it from Jboss managed jdbc datasource(which is being used by other applications also). So, I have already got mydatasource-ds.xml deployed inside deploy/ folder of jboss which looks like following

<datasources>

<local-tx-datasource>

<jndi-name>jdbc/mydatasource-ds</jndi-name>

<connection-url>jdbc:oracle:thin:oracle_user/passwordu@host:1521/sid</connection-url>

<driver-class>oracle.jdbc.driver.OracleDriver</driver-class>

<min-pool-size>1</min-pool-size>

<max-pool-size>20</max-pool-size>

<metadata>

<type-mapping>Oracle10g</type-mapping>

</metadata>

</local-tx-datasource>

</datasources>

In order to use above datasource, Here is what I had to put inside activemq-ra.rar/broker-config.xml file.

<beans ...>

<!-- shutdown hook is disabled as RAR classloader may be gone at shutdown -->

<broker xmlns="http://activemq.apache.org/schema/core" useJmx="true" useShutdownHook="false" brokerName="myactivemq.broker">

...

...

<persistenceAdapter>

<!-- kahaDB directory="activemq-data/kahadb"/ -->

<!--kahaDB directory="${jboss.server.data.dir}/activemq"/ -->

<jdbcPersistenceAdapter

dataDirectory="${jboss.server.data.dir}/activemq"

dataSource="#oracle-ds"/>

</persistenceAdapter>

...

</broker>

<bean id="oracle-ds" class="org.springframework.jndi.JndiObjectFactoryBean">

<property name="jndiName" value="java:jdbc/mydatasource-ds"></property>

</bean>

</beans>

Well, above did not work. What happened was that Jboss was trying to start ActiveMQ before deploying mydatasource-ds.xml which resulted in JNDI NameNotFoundException(as java:/jdbc/mydatasource-ds is not available until mydatasource-ds.xml gets deployed). So, in ActiveMQ configuration, I had to declare dependency on mydatasource-ds(so that it gets deployed first). To do it, I created a file named jboss-dependency.xml inside activemq-ra.rar/META-INF/ and pasted following on it.

<dependency xmlns="urn:jboss:dependency:1.0">

<item whenRequired="Real">jboss.jca:service=DataSourceBinding,name=jdbc/mydatasource-ds</item>

</dependency>

Now, We are good :).

BTW, above is by no means an exhaustive list because as we go, we will make a lot of changes for the actual setup but above should get a new person started with less amount of trouble than I had.

Subscribe to:

Posts (Atom)