I'm so excited that stanford is offering free online version of machine learning course this year, where you get to participate online like a student, finish the assignments(in time) and give the exams.

Linear algebra is one must have prerequisite for ML. I've done a course on same while I was in college. But, at that time, I learned more of calculations in linear algebra(multiplying matrices, calculating row reduced echelon form, calculating eigenvalues etc) and less of concepts. Now, the time has come when I really need to "understand" what those computations mean and what is the significance.

So, I watched some video lectures from MIT OCW Linear algebra course. I definitely recommend these lectures to everyone looking for a solid introduction to the topic.

As always, for better understanding and quick review, I took some notes. They can be found here.

Thursday, September 15, 2011

Monday, July 18, 2011

the XOR trick

XOR is one of the standard bitwise operation. Here is the truth table for same..

1 XOR 0 = 1

0 XOR 1 = 1

0 XOR 0 = 0

1 XOR 1 = 0

The trick that XOR provides is that if you take XOR of two N bit numbers(let say c = a XOR b), which is another N bit number then given one number(a or b) and the XOR result(c) you can determine the other number.

Basically( it can be easily proved by using truth tables)

a XOR (a XOR b) = b

b XOR (a XOR b) = a

This trick can be used to, for example, swap two integers without using a temporary variable.

Let say you wanted to swap x and y. Here is the required sequence.

x = x XOR y

y = x XOR y (y becomes old x)

x = x XOR y (x becomes old y)

In another use of this trick, you can implement doubly-linked list from a singly linked list without storing two pointers(prev, next) but just one(prev XOR next).

1 XOR 0 = 1

0 XOR 1 = 1

0 XOR 0 = 0

1 XOR 1 = 0

The trick that XOR provides is that if you take XOR of two N bit numbers(let say c = a XOR b), which is another N bit number then given one number(a or b) and the XOR result(c) you can determine the other number.

Basically( it can be easily proved by using truth tables)

a XOR (a XOR b) = b

b XOR (a XOR b) = a

This trick can be used to, for example, swap two integers without using a temporary variable.

Let say you wanted to swap x and y. Here is the required sequence.

x = x XOR y

y = x XOR y (y becomes old x)

x = x XOR y (x becomes old y)

In another use of this trick, you can implement doubly-linked list from a singly linked list without storing two pointers(prev, next) but just one(prev XOR next).

Friday, July 8, 2011

How to be a good (algorithmic) problem solver

Over the years I have worked with many algorithmic problems and there is definitely a pattern in the approach for solving them. I would like to note down my current approach of solving them and many books talk about similar stuff.

0. Solve by bruteforce(or backtracking)

If the problem size is small and bruteforce approach is ok, then do it and not worry too much about the efficient solution. Make sure you do some back of the envelop runtime cost calculations before you disregard bruteforce. In some problems, you might need an intelligent algorithm to solve a sub-problem and then a bruteforce approach to combine sub-problem solutions. This is especially true for some of the problems in code competitions.

now on to the ways of finding efficient solutions....

1. Solve by Example or solving problem instances of small sizes, n = 1,2,3..

Here we solve some example inputs to the problem, e.g. if the problem input is a number n, then I will try to solve it manually for n = 1,2,3,4... and see if a solution clicks in my mind. This same approach can sometimes lead to solution involving recursion(and dynamic programming) described later.

2. Solve by Simplification

Here We try to remove/add some constraints from/to the problem that simplify it, solve the simpler version or a different but similar problem and see if it can be used to solve/understand the original problem.

3. Solve by Recursion

"Thinking Recursively" is an art. Best way to "get" recursion is to practice and read the literature on functional programming where recursion is one of the key concepts. I highly recommend working through SICP and the "wishful thinking" concept described there. For example, to get factorial(n) you will think like, make a wish that someone gives you the factorial(n-1) then how will you get factorial(n) using that of (n-1). Be wishful !!

One of the things I "got" over time is that, for recursion to work It is not necessary that

f(n) = g(f(n-1))

but all of the following could work also

f(n) = g(f(n-1),f(n-2))

f(n) = g(f(n-1),f(n-2),f(n-3))

....

f(n) = g(f(n-1),f(n-2),...f(1),f(0))

3.1 Divide and Conquer(for example standard solution to merge-sort) is another powerful problem solving technique which uses recursion. Here, you solve the original problem by "merging" the solutions of two or more disjoint sub-problems(which are solved recursively).

3.2 Dynamic Programming is an optimization to improve running time after you have a recursive strategy. Draw the recursion tree and see if same sub-problem is being solved multiple times. That is, you will notice that total number of distinct sub-problems to be solved is much less than the total number of sub-problems being solved in straightforward recursive implementation. (In cormen et al, it is called the overlapping sub-problems property).

In such cases, a lot of, redundant computation is saved by caching the solution of various sub-problems and reusing them when required again.

Solution can be implemented either top down recursive with a table to cache the sub-problem solutions or a bottom-up iterative where you solve the smaller sub-problems first and then solve the bigger using solution of smaller sub-problems.

Some problems solved by dynamic programming are optimization problems and they exhibit optimal substructure property, that basically means that optimal solution to bigger problem "contains" optimal solution to the smaller sub-problems.

Most of the time, proof by induction is used here to prove the correctness of algorithm.

4. Solve using Greedy approach

It is a algorithm technique where making locally optimal/correct decisions, *sometimes*, leads to globally optimal/correct solutions. Usually, you can get idea of the solution by solving small size instances of the problem. However, this idea may or may not be correct so it is *essential* to prove that your locally optimal strategy gives you globally optimal results too.

In most of the cases following proof strategies work for greedy algorithms..

- proof by induction

- proof by contradiction: it is sometimes combined with, proof by exchange argument. Here you assume some optimal solution S* and a solution S produced by your greedy algorithm. Using, exchange of elements in S* so that exchange either preserves the optimality score or makes it better, you transform S* to S and that essentially proves that S is either better or equally good as S*. And hence, your greedy strategy works.

- Anything else :)

5. Solve by Reduction

Here we try to reduce the given problem to another problem whose solution is well-known and many of the code challenges problems are of this nature. Some of the algorithms, which come up very frequently, and you should know them by heart(including their proof, and if you have problem reading proofs then you need to work through How to Prove it!). Knowing them also means having their concise/optimal implementation in your [muscle] memory.

Sorting Algorithms - Index Sort, Merge Sort, Quick Sort, Bucket Sort, Radix Sort .

Binary Search Algorithm .

Graph Algorithms:

Breadth First Search(BFS) - Its most popular usage is to find single source shortest path in unweighted graph .

Depth First Search(DFS) .

Note that, Checking Bipartiteness of an undirected graph have standard BFS and DFS based solutions which you should know. Also, either of them can be used to find connected components in an undirected graph.

Topological Sorting [lets you find shortest/longest paths in a DAG in linear time, can be used to solve problems where there are some dependencies/prerequisites and you are required to find a valid ordering]

Strongly Connected Components of directed graph

Finding Euler Path

Dijkstra's Algorithm - for single source shortest path in weighted graph .

Bellman-Ford Algorithm - for single source shortest path in weighted graph with negative edges.

Floyd-Warshall algorithm - for all pair shortest path .

Kruskal and Prim's algorithms - for finding minimum spanning tree .

Max Network Flow (Ford-Fulkerson Algorithm)

Knapsack Problem

Zero-Sum and Alpha-Beta Search

5. Solve by using Data-Structures

You should know how to use Array, Hash table, (Single and Doubly) Linked List traversal, Pre/Post/In order traversal of Binary [Search] tree, Stack and Queue.

Many a times a problem can be solved by inserting the input into one of these data structures and traversing(or retrieving data from) it in certain way.

I found Stack interesting in another way. Most of the problems that are solved by simple recursion are also solvable using Stack and it is no surprise as in case of recursion the stack is hidden in the programming language implementation.

Let me conclude by saying that above list of algorithms and data structures is by no means exhaustive. They are just the most important and basic ones which you should know in great detail including their implementation and proofs.

In my opinion, Part-II(Problem Solving) of AIMA consists of very important algorithms and problem solving techniques. They are mostly related to Graph search but, you will see that there are a lot of problems that can be reduced to Graph search. One should work through at least that part of the book at some point in his life.

Another thing, you should be comfortable with, is basic calculations of computing (time/memory) complexity, the Big-O notation and methods of solving recurrence relations(which come up when computing complexity of any recursive algorithm).

Next level would be to understand theory of NP completeness(and awareness of known hard problems e.g. traveling-salesman-problem and their approximate solutions). You might want to know about advanced data structures such as BTree, KDTree, Trie etc and other graph algorithms. But these, I believe, you don't need to understand in great detail but just the usage. It is impossible to remember all the algorithms. However, you need to be "aware" of as many known data structures and algorithms as possible.

Lastly, This is a journey.. farther you go, better you get.

[Update: July 25' 2011] Sometimes writing precise statement of the problem also helps.

Basically it means to describe your problem under following headings...

Problem Description

Input

Output

Constraints

[Update: July 28' 2011] Sometimes problem is easier(or more efficient) to solve when the input is *Sorted*( or *preprocessed* in general) .

Also there can be problems where finding a *Signature* helps. For example problems related to anagrams of a string are usually solved by finding the signature that is the count of individual characters in the word or just the lexicographical sort of the word.

[Update: Feb 3' 2013] When solving an algorithmic problems. You should convince yourself, with [in]formal proofs, of 3 things (in worst cases)

1. Your algorithm will always terminate.

2. It will always produce the correct solution.

3. What is the worst case running time? (should be polynomial)

However, when solving some NP-complete problem, then 2 can be relaxed in the sense that algorithm is good enough as long as it either computes approximately good solution(if you're ok with it) or correct solution to specific instances of the general problem, that you are interested in. This relaxation is must because, with very high probability, there is no efficient(..polynomial time) solution to general problem you're solving.

0. Solve by bruteforce(or backtracking)

If the problem size is small and bruteforce approach is ok, then do it and not worry too much about the efficient solution. Make sure you do some back of the envelop runtime cost calculations before you disregard bruteforce. In some problems, you might need an intelligent algorithm to solve a sub-problem and then a bruteforce approach to combine sub-problem solutions. This is especially true for some of the problems in code competitions.

now on to the ways of finding efficient solutions....

1. Solve by Example or solving problem instances of small sizes, n = 1,2,3..

Here we solve some example inputs to the problem, e.g. if the problem input is a number n, then I will try to solve it manually for n = 1,2,3,4... and see if a solution clicks in my mind. This same approach can sometimes lead to solution involving recursion(and dynamic programming) described later.

2. Solve by Simplification

Here We try to remove/add some constraints from/to the problem that simplify it, solve the simpler version or a different but similar problem and see if it can be used to solve/understand the original problem.

3. Solve by Recursion

"Thinking Recursively" is an art. Best way to "get" recursion is to practice and read the literature on functional programming where recursion is one of the key concepts. I highly recommend working through SICP and the "wishful thinking" concept described there. For example, to get factorial(n) you will think like, make a wish that someone gives you the factorial(n-1) then how will you get factorial(n) using that of (n-1). Be wishful !!

One of the things I "got" over time is that, for recursion to work It is not necessary that

f(n) = g(f(n-1))

but all of the following could work also

f(n) = g(f(n-1),f(n-2))

f(n) = g(f(n-1),f(n-2),f(n-3))

....

f(n) = g(f(n-1),f(n-2),...f(1),f(0))

3.1 Divide and Conquer(for example standard solution to merge-sort) is another powerful problem solving technique which uses recursion. Here, you solve the original problem by "merging" the solutions of two or more disjoint sub-problems(which are solved recursively).

3.2 Dynamic Programming is an optimization to improve running time after you have a recursive strategy. Draw the recursion tree and see if same sub-problem is being solved multiple times. That is, you will notice that total number of distinct sub-problems to be solved is much less than the total number of sub-problems being solved in straightforward recursive implementation. (In cormen et al, it is called the overlapping sub-problems property).

In such cases, a lot of, redundant computation is saved by caching the solution of various sub-problems and reusing them when required again.

Solution can be implemented either top down recursive with a table to cache the sub-problem solutions or a bottom-up iterative where you solve the smaller sub-problems first and then solve the bigger using solution of smaller sub-problems.

Some problems solved by dynamic programming are optimization problems and they exhibit optimal substructure property, that basically means that optimal solution to bigger problem "contains" optimal solution to the smaller sub-problems.

Most of the time, proof by induction is used here to prove the correctness of algorithm.

4. Solve using Greedy approach

It is a algorithm technique where making locally optimal/correct decisions, *sometimes*, leads to globally optimal/correct solutions. Usually, you can get idea of the solution by solving small size instances of the problem. However, this idea may or may not be correct so it is *essential* to prove that your locally optimal strategy gives you globally optimal results too.

In most of the cases following proof strategies work for greedy algorithms..

- proof by induction

- proof by contradiction: it is sometimes combined with, proof by exchange argument. Here you assume some optimal solution S* and a solution S produced by your greedy algorithm. Using, exchange of elements in S* so that exchange either preserves the optimality score or makes it better, you transform S* to S and that essentially proves that S is either better or equally good as S*. And hence, your greedy strategy works.

- Anything else :)

5. Solve by Reduction

Here we try to reduce the given problem to another problem whose solution is well-known and many of the code challenges problems are of this nature. Some of the algorithms, which come up very frequently, and you should know them by heart(including their proof, and if you have problem reading proofs then you need to work through How to Prove it!). Knowing them also means having their concise/optimal implementation in your [muscle] memory.

Sorting Algorithms - Index Sort, Merge Sort, Quick Sort, Bucket Sort, Radix Sort .

Binary Search Algorithm .

Graph Algorithms:

Breadth First Search(BFS) - Its most popular usage is to find single source shortest path in unweighted graph .

Depth First Search(DFS) .

Note that, Checking Bipartiteness of an undirected graph have standard BFS and DFS based solutions which you should know. Also, either of them can be used to find connected components in an undirected graph.

Topological Sorting [lets you find shortest/longest paths in a DAG in linear time, can be used to solve problems where there are some dependencies/prerequisites and you are required to find a valid ordering]

Strongly Connected Components of directed graph

Finding Euler Path

Dijkstra's Algorithm - for single source shortest path in weighted graph .

Bellman-Ford Algorithm - for single source shortest path in weighted graph with negative edges.

Floyd-Warshall algorithm - for all pair shortest path .

Kruskal and Prim's algorithms - for finding minimum spanning tree .

Max Network Flow (Ford-Fulkerson Algorithm)

Knapsack Problem

Zero-Sum and Alpha-Beta Search

5. Solve by using Data-Structures

You should know how to use Array, Hash table, (Single and Doubly) Linked List traversal, Pre/Post/In order traversal of Binary [Search] tree, Stack and Queue.

Many a times a problem can be solved by inserting the input into one of these data structures and traversing(or retrieving data from) it in certain way.

I found Stack interesting in another way. Most of the problems that are solved by simple recursion are also solvable using Stack and it is no surprise as in case of recursion the stack is hidden in the programming language implementation.

Let me conclude by saying that above list of algorithms and data structures is by no means exhaustive. They are just the most important and basic ones which you should know in great detail including their implementation and proofs.

In my opinion, Part-II(Problem Solving) of AIMA consists of very important algorithms and problem solving techniques. They are mostly related to Graph search but, you will see that there are a lot of problems that can be reduced to Graph search. One should work through at least that part of the book at some point in his life.

Another thing, you should be comfortable with, is basic calculations of computing (time/memory) complexity, the Big-O notation and methods of solving recurrence relations(which come up when computing complexity of any recursive algorithm).

Next level would be to understand theory of NP completeness(and awareness of known hard problems e.g. traveling-salesman-problem and their approximate solutions). You might want to know about advanced data structures such as BTree, KDTree, Trie etc and other graph algorithms. But these, I believe, you don't need to understand in great detail but just the usage. It is impossible to remember all the algorithms. However, you need to be "aware" of as many known data structures and algorithms as possible.

Lastly, This is a journey.. farther you go, better you get.

[Update: July 25' 2011] Sometimes writing precise statement of the problem also helps.

Basically it means to describe your problem under following headings...

Problem Description

Input

Output

Constraints

[Update: July 28' 2011] Sometimes problem is easier(or more efficient) to solve when the input is *Sorted*( or *preprocessed* in general) .

Also there can be problems where finding a *Signature* helps. For example problems related to anagrams of a string are usually solved by finding the signature that is the count of individual characters in the word or just the lexicographical sort of the word.

[Update: Feb 3' 2013] When solving an algorithmic problems. You should convince yourself, with [in]formal proofs, of 3 things (in worst cases)

1. Your algorithm will always terminate.

2. It will always produce the correct solution.

3. What is the worst case running time? (should be polynomial)

However, when solving some NP-complete problem, then 2 can be relaxed in the sense that algorithm is good enough as long as it either computes approximately good solution(if you're ok with it) or correct solution to specific instances of the general problem, that you are interested in. This relaxation is must because, with very high probability, there is no efficient(..polynomial time) solution to general problem you're solving.

Sunday, June 26, 2011

Yahoo Summer School on IR - 2011

Last week, Yahoo Research organized a summer school on IR at IISC, Bangalore campus. This is the first time I visited IISC, the campus is lush green all around and add to it the nice weather.

Most of the sessions on classical IR were fairly detailed(but fast paced). A lot of the discussion assumed upfront knowledge of basic probability theory and linear algebra, which is kind of ok if you are attending a session on any advanced computer science material.

I could easily comprehend the sessions on Introduction to IR, IR Models(Boolean Retrieval, Vector space model, BIRM & BM25, IR based on language model), IR system quality evaluation and also how to create test collections, index creation with a bit of index compression techniques. Along the way, I followed the first half(up to chapter - 12, Language models for IR) of IIR book which covers more or less the same material covered in the mentioned sessions.

Then the sessions on web retrieval did a good job of describing the challenges faced in the web retrieval. It covered a very high level shape of web graph, architecture of web IR and crawlers. This also gave details about how pagerank is typically calculated from the web graph.

Then there were sessions on multimedia retrieval and learning to rank which tried to cover too much ground and I could only get a very high level outline of the area.

Overall it was a good effort and provided a very good introduction to the field of IR. I wish they had covered more on dynamic indexing as in the real world documents get added, deleted and modified every moment.

And, Yahoo research definitely deserves much appreciation for organizing this summer school.

References:

Most of the slides from sessions are available with Yahoo labs.

Modern Information Retrieval is written by one of the presenters in the summer school. It was cited multiple times and sessions on web retrieval are directly from the book chapters.

Introduction to Information Retrieval is another well known book by the author of famous Foundations of statistical natural language processing.

Most of the sessions on classical IR were fairly detailed(but fast paced). A lot of the discussion assumed upfront knowledge of basic probability theory and linear algebra, which is kind of ok if you are attending a session on any advanced computer science material.

I could easily comprehend the sessions on Introduction to IR, IR Models(Boolean Retrieval, Vector space model, BIRM & BM25, IR based on language model), IR system quality evaluation and also how to create test collections, index creation with a bit of index compression techniques. Along the way, I followed the first half(up to chapter - 12, Language models for IR) of IIR book which covers more or less the same material covered in the mentioned sessions.

Then the sessions on web retrieval did a good job of describing the challenges faced in the web retrieval. It covered a very high level shape of web graph, architecture of web IR and crawlers. This also gave details about how pagerank is typically calculated from the web graph.

Then there were sessions on multimedia retrieval and learning to rank which tried to cover too much ground and I could only get a very high level outline of the area.

Overall it was a good effort and provided a very good introduction to the field of IR. I wish they had covered more on dynamic indexing as in the real world documents get added, deleted and modified every moment.

And, Yahoo research definitely deserves much appreciation for organizing this summer school.

References:

Most of the slides from sessions are available with Yahoo labs.

Modern Information Retrieval is written by one of the presenters in the summer school. It was cited multiple times and sessions on web retrieval are directly from the book chapters.

Introduction to Information Retrieval is another well known book by the author of famous Foundations of statistical natural language processing.

Saturday, April 30, 2011

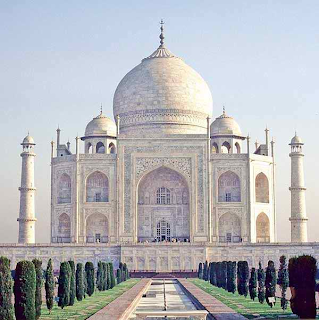

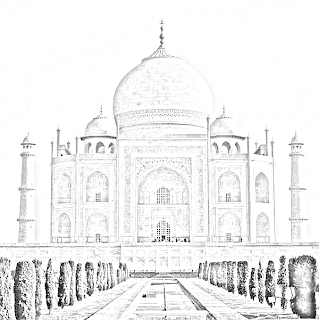

How I wrote [java]code to give Pencil Sketch effect to a picture

I am creating an application where I wanted to transform images to give them pencil sketch effect which can be easily obtained by photoshop as is described here.

However, I needed to automate the whole process in the code and certainly it is beyond me to look "inside" photoshop to see how it works(what are the algorithms/transformations etc and their implementations).

Anyway, from the article above it became clear that pseudocode looks like following:

s = Read-File-Into-Image("/path/to/image")

g = Convert-To-Gray-Scale(s)

i = Invert-Colors(g)

b = Apply-Gaussian-Blur(i)

result = Color-Dodge-Blend-Merge(b,g)

Now it was a matter of finding open source image-processing library that provided the operations I needed. By googling, I landed on JH Labs. They, freely, provide an image editor on which I tried to replicate the process described in photoshop article mentioned above and indeed I got the pencil sketch effect. Now, the best part is that image processing library behind JH Labs image editor is open source.

Then it was just a matter of coding the pseudocode using the JH labs library and here it is...

Here is how the image transform when I apply above code...

Notes:

- I used above code on png images. JH Labs code only works with TYPE_INT_ARGB(ref) images so you might need to transform them correctly.

- Code shown above was written to just check the concept and has no regards for performance, beauty.

Acknowledgement:

Well, to be honest, from being a alien to image-processing to getting to the code was not as linear a process as described in this post and I had to do a lot of hit n trial to get to this point. I want to thank Jerry who patiently answered my queries.

However, I needed to automate the whole process in the code and certainly it is beyond me to look "inside" photoshop to see how it works(what are the algorithms/transformations etc and their implementations).

Anyway, from the article above it became clear that pseudocode looks like following:

s = Read-File-Into-Image("/path/to/image")

g = Convert-To-Gray-Scale(s)

i = Invert-Colors(g)

b = Apply-Gaussian-Blur(i)

result = Color-Dodge-Blend-Merge(b,g)

Now it was a matter of finding open source image-processing library that provided the operations I needed. By googling, I landed on JH Labs. They, freely, provide an image editor on which I tried to replicate the process described in photoshop article mentioned above and indeed I got the pencil sketch effect. Now, the best part is that image processing library behind JH Labs image editor is open source.

Then it was just a matter of coding the pseudocode using the JH labs library and here it is...

BufferedImage src = null;

BufferedImage target = null;

src = ImageIO.read(new File("C:\\tmp\\ss.png"));

src = ImageUtils.convertImageToARGB(src);

//transformations begin=============

//gray scale

PointFilter grayScaleFilter = new GrayscaleFilter();

BufferedImage grayScale = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

grayScaleFilter.filter(src, grayScale);

//inverted gray scale

BufferedImage inverted = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

PointFilter invertFilter = new InvertFilter();

invertFilter.filter(grayScale,inverted);

//gaussian blurr

GaussianFilter gaussianFilter = new GaussianFilter(20);

BufferedImage gaussianFiltered = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

gaussianFilter.filter(inverted, gaussianFiltered);

//color dodge

ColorDodgeComposite cdc = new ColorDodgeComposite(1.0f);

CompositeContext cc = cdc.createContext(inverted.getColorModel(), grayScale.getColorModel(), null);

Raster invertedR = gaussianFiltered.getRaster();

Raster grayScaleR = grayScale.getRaster();

BufferedImage composite = new BufferedImage(src.getWidth(),src.getHeight(),src.getType());

WritableRaster colorDodgedR = composite.getRaster();

cc.compose(invertedR, grayScaleR , colorDodgedR);

//==================================

target = composite;

File outputfile = new File("C:\\tmp\\saved.png");

ImageIO.write(target, "png", outputfile);

Here is how the image transform when I apply above code...

Notes:

- I used above code on png images. JH Labs code only works with TYPE_INT_ARGB(ref) images so you might need to transform them correctly.

- Code shown above was written to just check the concept and has no regards for performance, beauty.

Acknowledgement:

Well, to be honest, from being a alien to image-processing to getting to the code was not as linear a process as described in this post and I had to do a lot of hit n trial to get to this point. I want to thank Jerry who patiently answered my queries.

Monday, April 18, 2011

How I revised Calculus

I have been following some stuff on machine learning and realized that over time I have forgotten some of the calculus stuff that I learned in college.

I mostly remembered single variable differentiation, integration, maxima/minima etc but could not recollect the precise meaning of limit, continuity, series convergence and also the taylor series and multivariable calculus stuff(partial derivatives, optimization of multivariable function, lagrange multipliers etc).

This note is the shortcut that I took to quickly recap all of the above.

First, I read following( relevant) chapters/sections from the Calculus book by Professor Gilbert Strang.

Section 2.6(Limits) and 2.7(Continuous Functions)

Chapter 10 (Infinite Series)

Chapter 11 (Vectors and Matrices)

Chapter 13 (Partial Derivatives)

I must say that the book is great and covers a lot of breadth maintaining enough depth. However, I could not fully grasp the contents of Chapter-13(Partial Derivatives), probably because of the 3d diagrams on 2d paper(or maybe I just could not pay enough attention).

In order to understand this chapter I followed all the video lectures on Partial Derivatives(Lecture-8 to Lecture-14) from MIT Multivariable calculus course. The video lectures are easier to follow and also explains diagrams done in applets which are much easier to understand. Also, the lecture notes give a very good summary of stuff covered in the lecture videos.

I mostly remembered single variable differentiation, integration, maxima/minima etc but could not recollect the precise meaning of limit, continuity, series convergence and also the taylor series and multivariable calculus stuff(partial derivatives, optimization of multivariable function, lagrange multipliers etc).

This note is the shortcut that I took to quickly recap all of the above.

First, I read following( relevant) chapters/sections from the Calculus book by Professor Gilbert Strang.

Section 2.6(Limits) and 2.7(Continuous Functions)

Chapter 10 (Infinite Series)

Chapter 11 (Vectors and Matrices)

Chapter 13 (Partial Derivatives)

I must say that the book is great and covers a lot of breadth maintaining enough depth. However, I could not fully grasp the contents of Chapter-13(Partial Derivatives), probably because of the 3d diagrams on 2d paper(or maybe I just could not pay enough attention).

In order to understand this chapter I followed all the video lectures on Partial Derivatives(Lecture-8 to Lecture-14) from MIT Multivariable calculus course. The video lectures are easier to follow and also explains diagrams done in applets which are much easier to understand. Also, the lecture notes give a very good summary of stuff covered in the lecture videos.

Wednesday, April 13, 2011

embedding activemq in jboss

Today, I worked on a prototype web application that utilized JMS. We decided to use ActiveMQ for this. Here are the things I had to do to get it running embedded inside Jboss.

First, I followed the process described in the doc "Integrating ActiveMQ with Jboss".

By default ActiveMQ uses KahaDB for persistence, but I wanted to use our oracle db infrastructure for this. A bit of googling got me to Introduction to ActiveMQ persistence and that led to JDBC data source configuration . By now I got the ActiveMQ successfully embedded in Jboss and running.

Next, Instead of giving all the oracle db info in the broker-config.xml, I wanted to get it from Jboss managed jdbc datasource(which is being used by other applications also). So, I have already got mydatasource-ds.xml deployed inside deploy/ folder of jboss which looks like following

In order to use above datasource, Here is what I had to put inside activemq-ra.rar/broker-config.xml file.

Well, above did not work. What happened was that Jboss was trying to start ActiveMQ before deploying mydatasource-ds.xml which resulted in JNDI NameNotFoundException(as java:/jdbc/mydatasource-ds is not available until mydatasource-ds.xml gets deployed). So, in ActiveMQ configuration, I had to declare dependency on mydatasource-ds(so that it gets deployed first). To do it, I created a file named jboss-dependency.xml inside activemq-ra.rar/META-INF/ and pasted following on it.

Now, We are good :).

BTW, above is by no means an exhaustive list because as we go, we will make a lot of changes for the actual setup but above should get a new person started with less amount of trouble than I had.

First, I followed the process described in the doc "Integrating ActiveMQ with Jboss".

By default ActiveMQ uses KahaDB for persistence, but I wanted to use our oracle db infrastructure for this. A bit of googling got me to Introduction to ActiveMQ persistence and that led to JDBC data source configuration . By now I got the ActiveMQ successfully embedded in Jboss and running.

Next, Instead of giving all the oracle db info in the broker-config.xml, I wanted to get it from Jboss managed jdbc datasource(which is being used by other applications also). So, I have already got mydatasource-ds.xml deployed inside deploy/ folder of jboss which looks like following

<datasources>

<local-tx-datasource>

<jndi-name>jdbc/mydatasource-ds</jndi-name>

<connection-url>jdbc:oracle:thin:oracle_user/passwordu@host:1521/sid</connection-url>

<driver-class>oracle.jdbc.driver.OracleDriver</driver-class>

<min-pool-size>1</min-pool-size>

<max-pool-size>20</max-pool-size>

<metadata>

<type-mapping>Oracle10g</type-mapping>

</metadata>

</local-tx-datasource>

</datasources>

In order to use above datasource, Here is what I had to put inside activemq-ra.rar/broker-config.xml file.

<beans ...>

<!-- shutdown hook is disabled as RAR classloader may be gone at shutdown -->

<broker xmlns="http://activemq.apache.org/schema/core" useJmx="true" useShutdownHook="false" brokerName="myactivemq.broker">

...

...

<persistenceAdapter>

<!-- kahaDB directory="activemq-data/kahadb"/ -->

<!--kahaDB directory="${jboss.server.data.dir}/activemq"/ -->

<jdbcPersistenceAdapter

dataDirectory="${jboss.server.data.dir}/activemq"

dataSource="#oracle-ds"/>

</persistenceAdapter>

...

</broker>

<bean id="oracle-ds" class="org.springframework.jndi.JndiObjectFactoryBean">

<property name="jndiName" value="java:jdbc/mydatasource-ds"></property>

</bean>

</beans>

Well, above did not work. What happened was that Jboss was trying to start ActiveMQ before deploying mydatasource-ds.xml which resulted in JNDI NameNotFoundException(as java:/jdbc/mydatasource-ds is not available until mydatasource-ds.xml gets deployed). So, in ActiveMQ configuration, I had to declare dependency on mydatasource-ds(so that it gets deployed first). To do it, I created a file named jboss-dependency.xml inside activemq-ra.rar/META-INF/ and pasted following on it.

<dependency xmlns="urn:jboss:dependency:1.0">

<item whenRequired="Real">jboss.jca:service=DataSourceBinding,name=jdbc/mydatasource-ds</item>

</dependency>

Now, We are good :).

BTW, above is by no means an exhaustive list because as we go, we will make a lot of changes for the actual setup but above should get a new person started with less amount of trouble than I had.

Thursday, April 7, 2011

konsole for windows

I am a person who loves to use linux for the bash shell, very very useful command line utilities and a myriad of other useful open source softwares(just one yum install .. away). But, for various constraints, my laptop at work has to have windows. First thing I do to fill the gap is to install cygwin, add it's bin/ folder to the PATH variable and install windows version of all the other open source softwares that I regularly use on linux.

This gets me very close except the cygwin terminal ui feel/experience is nowhere close to kde konsole. Also I have missed the multiple tabs feature of konsole for a long time. I never found a direct alternative of konsole in windows. Though there is one project, kde for windows, in progress which should bring the kde konsole port to windows.

But you don't have to wait any longer to get a konsole-like experience on windows. You should install console that has many konsole like features along with multiple tabs. Once you have installed it, open it and in the menu, go to Edit -> Settings. This opens the console settings window. Then give the path to bash exe from your cygwin installation. Also, click on Hotkeys settings link in the left column and change various key bindings to your liking to get an experience as close to konsole as you like.

Here is how the settings window looks like...

And, finally, here is my konsole for windows...

[Update: April 15'11] If you want to bind "clear" to clear the screen instead of using Ctrl-l for same then you need to create \$HOME/.inputrc file and save following on it.

"clear\r": '\C-l'

This gets me very close except the cygwin terminal ui feel/experience is nowhere close to kde konsole. Also I have missed the multiple tabs feature of konsole for a long time. I never found a direct alternative of konsole in windows. Though there is one project, kde for windows, in progress which should bring the kde konsole port to windows.

But you don't have to wait any longer to get a konsole-like experience on windows. You should install console that has many konsole like features along with multiple tabs. Once you have installed it, open it and in the menu, go to Edit -> Settings. This opens the console settings window. Then give the path to bash exe from your cygwin installation. Also, click on Hotkeys settings link in the left column and change various key bindings to your liking to get an experience as close to konsole as you like.

Here is how the settings window looks like...

And, finally, here is my konsole for windows...

[Update: April 15'11] If you want to bind "clear" to clear the screen instead of using Ctrl-l for same then you need to create \$HOME/.inputrc file and save following on it.

"clear\r": '\C-l'

Thursday, March 31, 2011

more: experiment with handwritten digit recognition and SVM

In case you haven't read, read the last post first.

In my last post, I wrote about a little experiment done on handwritten digit recognition using the WEKA explorer GUI. Now, this is the super simple scala script to do the same thing with code instead of gui.

On running above, this is what I get...

References:

Use WEKA in your Java Code

In my last post, I wrote about a little experiment done on handwritten digit recognition using the WEKA explorer GUI. Now, this is the super simple scala script to do the same thing with code instead of gui.

import weka.core.Instances

import weka.core.converters.ArffLoader.ArffReader

import weka.classifiers.{Classifier,Evaluation}

import weka.classifiers.functions.SMO

import java.io.{FileReader,BufferedReader}

object OCR {

def main(args : Array[String]) : Unit = {

val trainingSet = trainingInstances

evaluate(trainingSet,testInstances,svmClassifier(trainingSet))

}

def getInstances(src: String) = {

val reader = new BufferedReader(new FileReader(src))

val arffReader = new ArffReader(reader)

val instances = arffReader.getData()

instances.setClassIndex(instances.numAttributes() - 1)

instances //return the read instances

}

def trainingInstances = getInstances("\\path\\to\\optdigits.tra.arff")

def testInstances = getInstances("\\path\\to\\optdigits.tes.arff")

def svmClassifier(instances: Instances) = {

val smo = new SMO()

smo.buildClassifier(instances)

smo //return the trained multiclass SVM classifier

}

def evaluate(trainingSet: Instances, testSet: Instances, model: Classifier) {

val eval = new Evaluation(trainingSet)

eval.evaluateModel(model,testSet)

println(eval.toSummaryString("Results:\n", true))

}

}

On running above, this is what I get...

Results:

Correctly Classified Instances 1734 96.4942 %

Incorrectly Classified Instances 63 3.5058 %

Kappa statistic 0.961

K&B Relative Info Score 53500.7814 %

K&B Information Score 1777.1913 bits 0.989 bits/instance

Class complexity | order 0 5969.5443 bits 3.322 bits/instance

Class complexity | scheme 4192.4976 bits 2.3331 bits/instance

Complexity improvement (Sf) 1777.0468 bits 0.9889 bits/instance

Mean absolute error 0.1603

Root mean squared error 0.2721

Relative absolute error 89.0407 %

Root relative squared error 90.6884 %

Total Number of Instances 1797

References:

Use WEKA in your Java Code

Tuesday, March 29, 2011

experiment with handwritten digit recognition and SVM

For some time I have been working through various maths texts in order to build some base to understand machine learning algorithms. A lot of theory without application becomes a bit boring and I needed to take time off and do something different. So, I was looking to experiment with some well known problem in machine learning just for fun.

Last night I downloaded the handwritten character dataset from UCI machine learning repository, mainly the two files optdigits.tra(the training set) and optdigits.tes(the test set). The dataset basically contains 64 feature vectors(each integer ranging 0..16) and the class(the actual digit ranging 0..9). This is obtained by the process described on handwritten character dataset page..

Anyway, so essentially learning problem is to classify the class(0 to 9) from 64 feature vectors. I had heard somewhere that SVMs are state of the art way now a days for OCR(earlier it used to be Neural Networks) and decided to use SVM on the dataset.

I have some familiarity with WEKA , WEKA explorer makes it very easy to experiment with various machine learning algorithms. From here on, this post is very weka centric where I describe the steps I took to apply SVM to mentioned dataset.

First I converted both the dataset files from csv to weka ARFF format. I opened the csv files into excel and gave each column an unique name(as weka expects each feature vector to have a unique name). Then I saved the file back as csv again. Next, I loaded it into weka using the "Open File..." button on weka explorer "Preprocess" tab. Then I saved the loaded dataset as ARFF file. It needed only one more change. I opened the ARFF file on text editor and changed the type of last(column that represented the actual digit) attribute from numeric to nominal as follow(so that weka could apply classification algorithms to it)...

@attribute @@class@@ {0,1,2,3,4,5,6,7,8,9}

I am making both the ARFF files available if you want to skip above steps.

optdigits.tra.arff

optdigits.tes.arff

Next, I loaded optdigits.tra.arff from weka explorer using "Open File.." button. (I have highlighted the part showing # of instances that is the number of training examples)

Next, on the weka explorer, go to "Classify" tab and click on "Choose" button to select SMO as shown in the image below(If you click next to "Choose" button on the command written, it will open up a screen that tells that SMO is the the sequential minimal optimization algorithm for training support vector classifier)

Next, to load the test dataset, we select the "Supplied test set" radio button, click on "Set..." button and use the wizard to load test dataset file.

Then we click on the "Start" button that builds the model(..the classifier), runs it on the test dataset and shows the evaluation results as below.

It is interesting to see that we could get about 96.5% correct classifications with such ease :).

References:

Handwritten Character Dataset

Weka and the Weka Book for practical introduction to machine learning and Weka

Note: This experiment was done with weka version 3.6.4

Last night I downloaded the handwritten character dataset from UCI machine learning repository, mainly the two files optdigits.tra(the training set) and optdigits.tes(the test set). The dataset basically contains 64 feature vectors(each integer ranging 0..16) and the class(the actual digit ranging 0..9). This is obtained by the process described on handwritten character dataset page..

..We used preprocessing programs made available by NIST to extract normalized bitmaps of handwritten digits from a preprinted form. From a total of 43 people, 30 contributed to the training set and different 13 to the test set. 32x32 bitmaps are divided into nonoverlapping blocks of 4x4 and the number of on pixels are counted in each block. This generates an input matrix of 8x8 where each element is an integer in the range 0..16. This reduces dimensionality and gives invariance to small distortions..

Anyway, so essentially learning problem is to classify the class(0 to 9) from 64 feature vectors. I had heard somewhere that SVMs are state of the art way now a days for OCR(earlier it used to be Neural Networks) and decided to use SVM on the dataset.

I have some familiarity with WEKA , WEKA explorer makes it very easy to experiment with various machine learning algorithms. From here on, this post is very weka centric where I describe the steps I took to apply SVM to mentioned dataset.

First I converted both the dataset files from csv to weka ARFF format. I opened the csv files into excel and gave each column an unique name(as weka expects each feature vector to have a unique name). Then I saved the file back as csv again. Next, I loaded it into weka using the "Open File..." button on weka explorer "Preprocess" tab. Then I saved the loaded dataset as ARFF file. It needed only one more change. I opened the ARFF file on text editor and changed the type of last(column that represented the actual digit) attribute from numeric to nominal as follow(so that weka could apply classification algorithms to it)...

@attribute @@class@@ {0,1,2,3,4,5,6,7,8,9}

I am making both the ARFF files available if you want to skip above steps.

optdigits.tra.arff

optdigits.tes.arff

Next, I loaded optdigits.tra.arff from weka explorer using "Open File.." button. (I have highlighted the part showing # of instances that is the number of training examples)

Next, on the weka explorer, go to "Classify" tab and click on "Choose" button to select SMO as shown in the image below(If you click next to "Choose" button on the command written, it will open up a screen that tells that SMO is the the sequential minimal optimization algorithm for training support vector classifier)

Next, to load the test dataset, we select the "Supplied test set" radio button, click on "Set..." button and use the wizard to load test dataset file.

Then we click on the "Start" button that builds the model(..the classifier), runs it on the test dataset and shows the evaluation results as below.

=== Evaluation on test set ===

=== Summary ===

Correctly Classified Instances 1734 96.4942 %

Incorrectly Classified Instances 63 3.5058 %

Kappa statistic 0.961

Mean absolute error 0.1603

Root mean squared error 0.2721

Relative absolute error 89.0407 %

Root relative squared error 90.6884 %

Total Number of Instances 1797

=== Detailed Accuracy By Class ===

TP Rate FP Rate Precision Recall F-Measure ROC Area Class

0.994 0.001 0.989 0.994 0.992 1 0

0.984 0.01 0.918 0.984 0.95 0.996 1

0.96 0.003 0.971 0.96 0.966 0.993 2

0.934 0.002 0.983 0.934 0.958 0.987 3

0.994 0.003 0.973 0.994 0.984 0.997 4

0.989 0.008 0.933 0.989 0.96 0.996 5

0.983 0.001 0.994 0.983 0.989 0.999 6

0.922 0.002 0.982 0.922 0.951 0.998 7

0.943 0.004 0.965 0.943 0.953 0.982 8

0.944 0.006 0.95 0.944 0.947 0.991 9

Weighted Avg. 0.965 0.004 0.966 0.965 0.965 0.994

=== Confusion Matrix ===

a b c d e f g h i j <-- classified as

177 0 0 0 1 0 0 0 0 0 | a = 0

0 179 0 0 0 0 1 0 1 1 | b = 1

0 7 170 0 0 0 0 0 0 0 | c = 2

1 1 4 171 0 2 0 3 1 0 | d = 3

0 0 0 0 180 0 0 0 1 0 | e = 4

0 0 1 0 0 180 0 0 0 1 | f = 5

1 0 0 0 1 0 178 0 1 0 | g = 6

0 0 0 0 1 7 0 165 1 5 | h = 7

0 7 0 0 0 1 0 0 164 2 | i = 8

0 1 0 3 2 3 0 0 1 170 | j = 9

It is interesting to see that we could get about 96.5% correct classifications with such ease :).

References:

Handwritten Character Dataset

Weka and the Weka Book for practical introduction to machine learning and Weka

Note: This experiment was done with weka version 3.6.4

Thursday, March 10, 2011

concurrency concepts in databases and hibernate

I've read about this multiple times but often forget the details as it is not often that I need to work on it in my applications. So, here I am taking quick notes for faster recap of it later.

First thing to know is what are the issues that can happen by multiple concurrent transactions if no preventive measures are taken.

lost update : I have found different meanings to this term. The hibernate book describes this as follow. One transaction commits its modifications and another one rolls back resulting undoing of the updates done by the other transaction.

However at many other places lost-update has the meaning that of second-lost-update described later. And, ANSI SQL-92 standard does not seem to talk about it.

dirty read : One transaction fetches a record and modifies it. Another transaction fetches the same record and gets the modifications made by first transaction even if they are not committed.

unrepeatable read : One transaction reads some record, another transaction commits some modifications to same record, first transaction reads the same record again and finds it different from what was read previously.

One special case of unrepeatable read is the second lost updates problem. Let say, One transaction reads some record and modifies it, another transaction reads same record and modifies it. First one commits its modifications and then the second commits and modifications made by first transaction are lost.

phantom read : One transaction reads a list of records by some filter criteria. Another transaction starts, inserts some records that fulfill the filter criteria used by first transaction and commits. First transaction reads same list again by same filter criteria but ends up getting a different set of records this time.

Now, to overcome above issues ANSI standard defines following transaction isolation levels.

read uncommitted : this permits all 3.. dirty read, unrepeatable read and phantom reads.

read committed : this does not permit dirty reads but allows unrepeatable as well as phantom reads.

repeatable read : this does not permit dirty and unrepeatable reads but allows phantom reads.

serializable : this is the strictest transaction isolation which simply does not seem to allow concurrent transactions. this again is usually not used in typical applications due to being too restrictive and badly performing. This does not allow any of dirty read, unrepeatable read or phantom reads.

exact implementation of above isolation levels varies significantly among the vendors. One has to consult the docs of particular db to understand the impact of each isolation level to performance and scalability.

In Hibernate, by default, every JDBC connection to a database is in the default isolation level of the DBMS, usually read-committed or repeatable-read. You can change this by explicitly setting hibernate.connection.isolation property. Then hibernate will set the mentioned isolation level to each new JDBC connection(note that it will not change the default isolation level of your db).

Optimistic concurrency control:

An optimistic approach assumes that everything will be OK and any conflicts are detected only in the end when data is written/flushed to the db.

Multi-user applications usually default to optimistic concurrency control and database connections with a read-committed isolation level.

Let say the isolation level is set to read-committed. Transaction A and B start at the same time and both read and modify the same record(they can not see each other's changes as dirty read is not permitted in read-committed isolation level). Transaction A commits and then B does. Then one of the following 3 things can happen.

last commit wins : both transactions commit successfully and B overrides any modifications done by A and no error raised.

first commit wins : when second transaction(B) is committed, conflict is detected and error is raised.

merge conflicting updates : conflicts are detected and one interactive dialogue helps resolving those conflicts

With hibernate, you get last-commit-wins by default. You can enable first-commit-wins strategy by enabling optimistic concurrency control. This needs versioning enabled for entities .

In the end, if you need more fine grained control on locking then you can use variety of "SELECT ... FOR UPDATE ..." statements by using LockMode.UPGRADE et al. This is called explicit pessimistic locking.

Reference:

Chapter-9: Transactions and Concurrency in the book "Java Persistence with Hibernate".

ANSI SQL-92 Standard Spec

First thing to know is what are the issues that can happen by multiple concurrent transactions if no preventive measures are taken.

lost update : I have found different meanings to this term. The hibernate book describes this as follow. One transaction commits its modifications and another one rolls back resulting undoing of the updates done by the other transaction.

However at many other places lost-update has the meaning that of second-lost-update described later. And, ANSI SQL-92 standard does not seem to talk about it.

dirty read : One transaction fetches a record and modifies it. Another transaction fetches the same record and gets the modifications made by first transaction even if they are not committed.

unrepeatable read : One transaction reads some record, another transaction commits some modifications to same record, first transaction reads the same record again and finds it different from what was read previously.

One special case of unrepeatable read is the second lost updates problem. Let say, One transaction reads some record and modifies it, another transaction reads same record and modifies it. First one commits its modifications and then the second commits and modifications made by first transaction are lost.

phantom read : One transaction reads a list of records by some filter criteria. Another transaction starts, inserts some records that fulfill the filter criteria used by first transaction and commits. First transaction reads same list again by same filter criteria but ends up getting a different set of records this time.

Now, to overcome above issues ANSI standard defines following transaction isolation levels.

read uncommitted : this permits all 3.. dirty read, unrepeatable read and phantom reads.

read committed : this does not permit dirty reads but allows unrepeatable as well as phantom reads.

repeatable read : this does not permit dirty and unrepeatable reads but allows phantom reads.

serializable : this is the strictest transaction isolation which simply does not seem to allow concurrent transactions. this again is usually not used in typical applications due to being too restrictive and badly performing. This does not allow any of dirty read, unrepeatable read or phantom reads.

exact implementation of above isolation levels varies significantly among the vendors. One has to consult the docs of particular db to understand the impact of each isolation level to performance and scalability.

In Hibernate, by default, every JDBC connection to a database is in the default isolation level of the DBMS, usually read-committed or repeatable-read. You can change this by explicitly setting hibernate.connection.isolation property. Then hibernate will set the mentioned isolation level to each new JDBC connection(note that it will not change the default isolation level of your db).

Optimistic concurrency control:

An optimistic approach assumes that everything will be OK and any conflicts are detected only in the end when data is written/flushed to the db.

Multi-user applications usually default to optimistic concurrency control and database connections with a read-committed isolation level.

Let say the isolation level is set to read-committed. Transaction A and B start at the same time and both read and modify the same record(they can not see each other's changes as dirty read is not permitted in read-committed isolation level). Transaction A commits and then B does. Then one of the following 3 things can happen.

last commit wins : both transactions commit successfully and B overrides any modifications done by A and no error raised.

first commit wins : when second transaction(B) is committed, conflict is detected and error is raised.

merge conflicting updates : conflicts are detected and one interactive dialogue helps resolving those conflicts

With hibernate, you get last-commit-wins by default. You can enable first-commit-wins strategy by enabling optimistic concurrency control. This needs versioning enabled for entities .

In the end, if you need more fine grained control on locking then you can use variety of "SELECT ... FOR UPDATE ..." statements by using LockMode.UPGRADE et al. This is called explicit pessimistic locking.

Reference:

Chapter-9: Transactions and Concurrency in the book "Java Persistence with Hibernate".

ANSI SQL-92 Standard Spec

Sunday, February 27, 2011

OCW-18.443: problem-set#4

My solutions to 4th problem set in MIT-Statistics for Applications course.

You should read the handout given with this assignment as same material is not covered in the text book.

1. We can simply use the known(derived in exp-8.4.3 of "Mathematical Statistics and Data Analysis") formulae. Here is the R-terminal interaction...

> data = c(0.312,0.238,0.446,0.968,0.576,0.471,0.596)

>

> estimated_variance = var(data)

> estimated_mean = mean(data)

>

> estimated_lambda = estimated_mean / estimated_variance

> estimated_lambda

[1] 9.089924

>

> estimated_alpha = (estimated_mean * estimated_mean)/estimated_variance

> estimated_alpha

[1] 4.683908

>

>

2. Let T(X) be an unbiased estimator of $p^2$

Then E[T(X)] = $p^2$

=> T(0)P(X=0) + T(1)P(X=1) + T(2)P(X=2) = $p^2$

=> ${(1-p)}^2$T(0) + 2p(1-p)T(1) + $p^2$T(2) = $p^2$

...

...

=> $p^2$(T(0) - 2T(1) + T(2) - 1) + 2p(T(1) - T(0)) + T(0) = 0

For above to be true for all values of p in [0,1], we have

T(0) - 2T(1) + T(2) - 1 = 0

T(1) - T(0) = 0

T(0) = 0

On solving above we get

T(0) = 0

T(1) = 0

T(2) = 1

This is the unbiased estimator of $p^2$. Most surprising part of above estimator is that it estimates $p^2$ to be 0 even if X = 1 that is there was one success(and hence p should not be 0)

3.

4.

You should read the handout given with this assignment as same material is not covered in the text book.

1. We can simply use the known(derived in exp-8.4.3 of "Mathematical Statistics and Data Analysis") formulae. Here is the R-terminal interaction...

> data = c(0.312,0.238,0.446,0.968,0.576,0.471,0.596)

>

> estimated_variance = var(data)

> estimated_mean = mean(data)

>

> estimated_lambda = estimated_mean / estimated_variance

> estimated_lambda

[1] 9.089924

>

> estimated_alpha = (estimated_mean * estimated_mean)/estimated_variance

> estimated_alpha

[1] 4.683908

>

>

2. Let T(X) be an unbiased estimator of $p^2$

Then E[T(X)] = $p^2$

=> T(0)P(X=0) + T(1)P(X=1) + T(2)P(X=2) = $p^2$

=> ${(1-p)}^2$T(0) + 2p(1-p)T(1) + $p^2$T(2) = $p^2$

...

...

=> $p^2$(T(0) - 2T(1) + T(2) - 1) + 2p(T(1) - T(0)) + T(0) = 0

For above to be true for all values of p in [0,1], we have

T(0) - 2T(1) + T(2) - 1 = 0

T(1) - T(0) = 0

T(0) = 0

On solving above we get

T(0) = 0

T(1) = 0

T(2) = 1

This is the unbiased estimator of $p^2$. Most surprising part of above estimator is that it estimates $p^2$ to be 0 even if X = 1 that is there was one success(and hence p should not be 0)

3.

4.

Saturday, February 26, 2011

OCW-18.443: problem-set#3

My solutions to 3rd problem set in MIT-Statistics for Applications course.

Most of the solutions are done using R(version 2.12.1).

Note: In this problem set, if you don't understand the meaning of y-on-x, x-on-y and bfsd regression, then you should read the handouts given for this assignment.

1(a)

y - distance

x - year

years are constant and also not measured using some instrument(and hence no chances of error), so clearly year is not a random variable. Hence the most suitable regression here is y-on-x.

1(b) Here is the R-terminal interaction.

>

> year = c(1952,1956,1960,1964,1968,1972,1976,1980,1984)

> distance = c(758.3,784.4,813.6,808.5,891.9,825.7,835.9,855.6,855.6)

>

> plot(year,distance) # draw distance(y-axis) vs year(x-axis) plot

>

>

> #clearly (1968,891.9) is an outlier, otherwise it looks close to linear

>

> linmodel = lm(distance ~ year) #create the linear model

> residuals = resid(linmodel); residuals #find and print the residuals

1 2 3 4 5 6 7

-22.893333 -7.870000 10.253333 -5.923333 66.400000 -10.876667 -11.753333

8 9

-3.130000 -14.206667

>

> plot(year, residuals) #plot residuals vs year

>

1(c) I notice that 7 out of 9 residuals are negative. And, actually there seems to be a pattern.

2(a)

y - power output

x - wind speed

If we do y-on-x regression, prediction for power output at sufficiently small speeds comes negative which is not possible. Also, no one will be interested in predicting wind speed from power output(as naturally power is being produced by wind and not viceversa) :).

2(b)

First, let us find the correlation coefficients for all 10 values using R. Here it is

>

> output = c(0.123,0.558,1.194,1.582,1.822,1.800,2.166,2.303,2.386,2.236)

>

> speed = c(2.45,3.05,4.10,5.00,6.00,7.00,8.15,9.10,9.70,10.00)

>

> cor(output,speed)

[1] 0.9419852

>

Now, let us find the correlation for 7 values where windspeeds vary from 3 to 9.1 mph.

>

> cor(output[2:8],speed[2:8])

[1] 0.951478

>

Now, let us do the y-on-x regression for the 7 values.

>

> speed = speed[2:8]

> output = output[2:8]

>

> linmodel = lm(output ~ speed) #build the model

> coeffs = coefficients(linmodel)

>

> #Plot the output vs speed along with fitted line

> plot(speed,output,xlab="Wind Speed",ylab="Power Output")

> abline(coeffs[1],coeffs[2])

>

3. Again, I solved it using R. Here is R-terminal interaction.

> height = c(5000,10000,15000,20000,25000,30000,35000,40000,45000,50000,

55000,60000,70000,80000,90000,100000)

>

> pressure = c(632.50,522.70,429.00,349.50,282.40,226.10,179.30,141.20,

111.10,87.80,68.90,54.20,33.70,21.00,13.20,8.36)

>

> plot(height,pressure)

> #clearly it does not look linear so we can expect some pattern in residuals

>

>

> linmodel = lm(pressure ~ height)

>

> coeffs = coefficients(linmodel)

> coeffs[1] #Intercept

(Intercept)

471.6105

> coeffs[2] #slope

height

-0.006006586

>

> cor(height,pressure) #correlation

[1] -0.885868

>

> residuals = resid(linmodel)

>

> residuals

1 2 3 4 5 6 7

190.922430 111.155362 47.488294 -1.978775 -39.045843 -65.312911 -82.079980

8 9 10 11 12 13 14

-90.147048 -90.214116 -83.481185 -72.348253 -57.015322 -17.449458 29.916405

15 16

82.182268 137.408132

>

> plot(height,residuals)

Clearly residuals vs height plot shows a pattern and as expected data does not fit linear model.

4. We are using height, pressure from problem#3

>

> squared_height = height * height

> squared_height

[1] 2.500e+07 1.000e+08 2.250e+08 4.000e+08 6.250e+08 9.000e+08 1.225e+09

[8] 1.600e+09 2.025e+09 2.500e+09 3.025e+09 3.600e+09 4.900e+09 6.400e+09

[15] 8.100e+09 1.000e+10

>

> plot(squared_height,pressure)

> #clearly this does not look linear either, so we can expect pattern in residuals

>

> linmodel = lm(pressure ~ squared_height)

>

> coeffs = coefficients(linmodel)

> coeffs[1] #Intercept

(Intercept)

332.7193

> coeffs[2] #slope

squared_height

-4.737236e-08

>

> cor(squared_height,pressure) #correlation

[1] -0.7394696

>

> residuals = resid(linmodel)

>

> residuals

1 2 3 4 5 6

300.965031 194.717958 106.939504 35.729667 -20.711551 -63.984150

7 8 9 10 11 12

-95.388132 -115.723495 -125.690240 -126.488366 -120.517875 -107.978765

13 14 15 16

-66.894691 -8.536143 64.196877 149.364370

>

> plot(squared_height,residuals)

Clearly residuals vs height plot shows a pattern.

5. Again, the R-terminal interaction is here...

> ln_pressure = log(pressure)

> ln_pressure

[1] 6.449680 6.259008 6.061457 5.856504 5.643325 5.420977 5.189060 4.950177

[9] 4.710431 4.475062 4.232656 3.992681 3.517498 3.044522 2.580217 2.123458

>

> plot(height,ln_pressure)

> #clearly its linear, so there shouldn't be any pattern in residuals

>

> linmodel = lm(ln_pressure ~ height)

>

> coeffs = coefficients(linmodel)

> coeffs[1] #Intercept

(Intercept)

6.76613

> coeffs[2] #slope

height

-4.623476e-05

>

> cor(ln_pressure,height) #correlation

[1] -0.9996577

>

> residuals = resid(linmodel)

> residuals

1 2 3 4 5

-0.0852764784 -0.0447752103 -0.0111521741 0.0150682717 0.0330630186

6 7 8 9 10

0.0418896953 0.0411464997 0.0334372471 0.0248644217 0.0206690287

11 12 13 14 15

0.0094375091 0.0006360425 -0.0121994225 -0.0228272162 -0.0247852183

16

-0.0191960148

>

> plot(height,residuals)

Though plot shows some pattern but residual absolute values are tiny compared to those in problem#3 and problem#4. However to be sure that our model is correct. Let us plot the data points and fitted line together and see that it fits well

>

> plot(height,ln_pressure)

> abline(6.76613,-0.00004623476)

Most of the solutions are done using R(version 2.12.1).

Note: In this problem set, if you don't understand the meaning of y-on-x, x-on-y and bfsd regression, then you should read the handouts given for this assignment.

1(a)

y - distance

x - year

years are constant and also not measured using some instrument(and hence no chances of error), so clearly year is not a random variable. Hence the most suitable regression here is y-on-x.

1(b) Here is the R-terminal interaction.

>

> year = c(1952,1956,1960,1964,1968,1972,1976,1980,1984)

> distance = c(758.3,784.4,813.6,808.5,891.9,825.7,835.9,855.6,855.6)

>

> plot(year,distance) # draw distance(y-axis) vs year(x-axis) plot

>

>

> #clearly (1968,891.9) is an outlier, otherwise it looks close to linear

>

> linmodel = lm(distance ~ year) #create the linear model

> residuals = resid(linmodel); residuals #find and print the residuals

1 2 3 4 5 6 7

-22.893333 -7.870000 10.253333 -5.923333 66.400000 -10.876667 -11.753333

8 9

-3.130000 -14.206667

>

> plot(year, residuals) #plot residuals vs year

>

1(c) I notice that 7 out of 9 residuals are negative. And, actually there seems to be a pattern.

2(a)

y - power output

x - wind speed

If we do y-on-x regression, prediction for power output at sufficiently small speeds comes negative which is not possible. Also, no one will be interested in predicting wind speed from power output(as naturally power is being produced by wind and not viceversa) :).

2(b)

First, let us find the correlation coefficients for all 10 values using R. Here it is

>

> output = c(0.123,0.558,1.194,1.582,1.822,1.800,2.166,2.303,2.386,2.236)

>

> speed = c(2.45,3.05,4.10,5.00,6.00,7.00,8.15,9.10,9.70,10.00)

>

> cor(output,speed)

[1] 0.9419852

>

Now, let us find the correlation for 7 values where windspeeds vary from 3 to 9.1 mph.

>

> cor(output[2:8],speed[2:8])

[1] 0.951478

>

Now, let us do the y-on-x regression for the 7 values.

>

> speed = speed[2:8]

> output = output[2:8]

>

> linmodel = lm(output ~ speed) #build the model

> coeffs = coefficients(linmodel)

>

> #Plot the output vs speed along with fitted line

> plot(speed,output,xlab="Wind Speed",ylab="Power Output")

> abline(coeffs[1],coeffs[2])

>

3. Again, I solved it using R. Here is R-terminal interaction.

> height = c(5000,10000,15000,20000,25000,30000,35000,40000,45000,50000,

55000,60000,70000,80000,90000,100000)

>

> pressure = c(632.50,522.70,429.00,349.50,282.40,226.10,179.30,141.20,

111.10,87.80,68.90,54.20,33.70,21.00,13.20,8.36)

>

> plot(height,pressure)

> #clearly it does not look linear so we can expect some pattern in residuals

>

>

> linmodel = lm(pressure ~ height)

>

> coeffs = coefficients(linmodel)

> coeffs[1] #Intercept

(Intercept)

471.6105

> coeffs[2] #slope

height

-0.006006586

>

> cor(height,pressure) #correlation

[1] -0.885868

>

> residuals = resid(linmodel)

>

> residuals

1 2 3 4 5 6 7

190.922430 111.155362 47.488294 -1.978775 -39.045843 -65.312911 -82.079980

8 9 10 11 12 13 14

-90.147048 -90.214116 -83.481185 -72.348253 -57.015322 -17.449458 29.916405

15 16

82.182268 137.408132

>

> plot(height,residuals)

Clearly residuals vs height plot shows a pattern and as expected data does not fit linear model.

4. We are using height, pressure from problem#3

>

> squared_height = height * height

> squared_height

[1] 2.500e+07 1.000e+08 2.250e+08 4.000e+08 6.250e+08 9.000e+08 1.225e+09

[8] 1.600e+09 2.025e+09 2.500e+09 3.025e+09 3.600e+09 4.900e+09 6.400e+09

[15] 8.100e+09 1.000e+10

>

> plot(squared_height,pressure)

> #clearly this does not look linear either, so we can expect pattern in residuals

>

> linmodel = lm(pressure ~ squared_height)

>

> coeffs = coefficients(linmodel)

> coeffs[1] #Intercept

(Intercept)

332.7193

> coeffs[2] #slope

squared_height

-4.737236e-08

>

> cor(squared_height,pressure) #correlation

[1] -0.7394696

>

> residuals = resid(linmodel)

>

> residuals

1 2 3 4 5 6

300.965031 194.717958 106.939504 35.729667 -20.711551 -63.984150

7 8 9 10 11 12

-95.388132 -115.723495 -125.690240 -126.488366 -120.517875 -107.978765

13 14 15 16

-66.894691 -8.536143 64.196877 149.364370

>

> plot(squared_height,residuals)

Clearly residuals vs height plot shows a pattern.

5. Again, the R-terminal interaction is here...

> ln_pressure = log(pressure)

> ln_pressure

[1] 6.449680 6.259008 6.061457 5.856504 5.643325 5.420977 5.189060 4.950177

[9] 4.710431 4.475062 4.232656 3.992681 3.517498 3.044522 2.580217 2.123458

>

> plot(height,ln_pressure)

> #clearly its linear, so there shouldn't be any pattern in residuals

>

> linmodel = lm(ln_pressure ~ height)

>

> coeffs = coefficients(linmodel)

> coeffs[1] #Intercept

(Intercept)

6.76613

> coeffs[2] #slope

height

-4.623476e-05

>

> cor(ln_pressure,height) #correlation

[1] -0.9996577

>

> residuals = resid(linmodel)

> residuals

1 2 3 4 5

-0.0852764784 -0.0447752103 -0.0111521741 0.0150682717 0.0330630186

6 7 8 9 10

0.0418896953 0.0411464997 0.0334372471 0.0248644217 0.0206690287

11 12 13 14 15

0.0094375091 0.0006360425 -0.0121994225 -0.0228272162 -0.0247852183

16

-0.0191960148

>

> plot(height,residuals)

Though plot shows some pattern but residual absolute values are tiny compared to those in problem#3 and problem#4. However to be sure that our model is correct. Let us plot the data points and fitted line together and see that it fits well

>

> plot(height,ln_pressure)

> abline(6.76613,-0.00004623476)

Thursday, February 24, 2011

OCW-18.443: problem-set#1

My solutions to 1st problem set in MIT-Statistics for Applications course.

Some of the solutions are done using R(version 2.12.1).

Also, they use following function that prints out the confidence interval for mean given the sample mean, estimated standard deviation, percentage and size of the sample.

1(a) Sum of independent normal random variables is another normal random variable. So sample mean(Xbar) is also normal random variable with

E[Xbar] = 0 and

Var(Xbar) = 1/n = 1/49

So, (Xbar - E[Xbar])/$\sqrt{Var(Xbar)}$ is approximately N(0,1). That is, 7Xbar is N(0,1).

Now, P(|Xbar| < c) = 0.8

=> P(-c < Xbar < c) = 0.8

=> P(-7c < 7Xbar < 7c) = 0.8

=> P(-7c < Z < 7c) = 0.8 , where Z is standard normal random variable

=> 2F(7c) - 1 = 0.8 , where F is CDF of standard normal random variable

=> F(7c) = 0.9

From the table of CDF of standard normal distribution we can see that F(1.28) = .8997

So approximately 7c = 1.28

=> c = 0.183

1(b) T follows a t distribution with 4 degrees of freedom.

(i) P(|T| < t0) = 0.9

=> P(-t0 < T < t0) = 0.9

=> 2P(T < t0) - 1 = 0.9

=> P(T < t0) = 0.95

by looking at the quantiles of t-distribution table, we can check that t0 = 2.132

(ii) P(T > t0) = 0.05

=> 1 - P(T < t0) = 0.05

=> P(T < t0) = 0.95

so again, t0 = 2.132

2. We will solve this with R. Here is the R terminal interaction:

> data = c(6.6729,6.6735,6.6873,6.6699,6.6742,6.6830,6.6754) #loading the data

>

> mean(data) #2(a)

[1] 6.6766

>

> sd(data) #2(b)

[1] 0.006202688

>

> (6.6766 - 6.1)/0.006202688 #2(c)

[1] 92.9597

>

> n_conf_interval_mean(6.6766,0.006202688,95,7) #2(d)

[1] "Required interval is (6.672005,6.681195)."

>

3(a).

$\sigma^2$ can be estimated by

$S^2$ = $\frac{1}{n-1}\sum_{i=1}^n {(X_i - Xbar)}^2$

Also, $\frac{(n-1)S^2}{\sigma^2}$ has chi-square distribution with n-1 degrees of freedom.

Let $F_m(\alpha)$ denote the point beyond which the chi-square distribution with m degrees of freedom has probability $\alpha$. That is, $F_m(\alpha)$ is (1-$\alpha$)th quantile of chi-square distribution with m degrees of freedom.

Then it can be derived(a similar derivation is done in Example-8.5.6 in book " Mathematical Statistics and Data Analysis ") that

(1-$\alpha$)100 % confidence interval for $\sigma^2$ is

($\frac{(n-1)S^2}{F_{n-1}(\alpha/2)}$ , $\frac{(n-1)S^2}{F_{n-1}(1 - \alpha/2)}$)